Like most people in tech, I have been bombarded lately with a ton of “AI stuff”. Articles upon articles of opinions and rants. Papers and videos on various subjects from technical architectures to societal impacts and beyond.

While one must preserve a level-headed view on all these subjects and opinions, two related topics make me sad and annoyed at the same time. Namely:

- AI won’t replace people. People using AI will replace people, and,

- Prompt engineering is the future. Everyone should learn it to be competitive.

So let’s explore these two topics in a bit of detail.

People using AI will replace people not using AI

I believe there are a few reasons why this topic keeps re-appearing in all discussion threads. The most likely one though is “forced optimism”. It is hard to believe that people don’t feel anxious about these massive shifts – after all, it’s human nature to feel anxiety towards change (of any kind). It becomes easier to tell oneself “I will adapt, it will be good for me. I have control.” We, humans, tend to overestimate how much control we have in any given situation, and this is a perfect example.

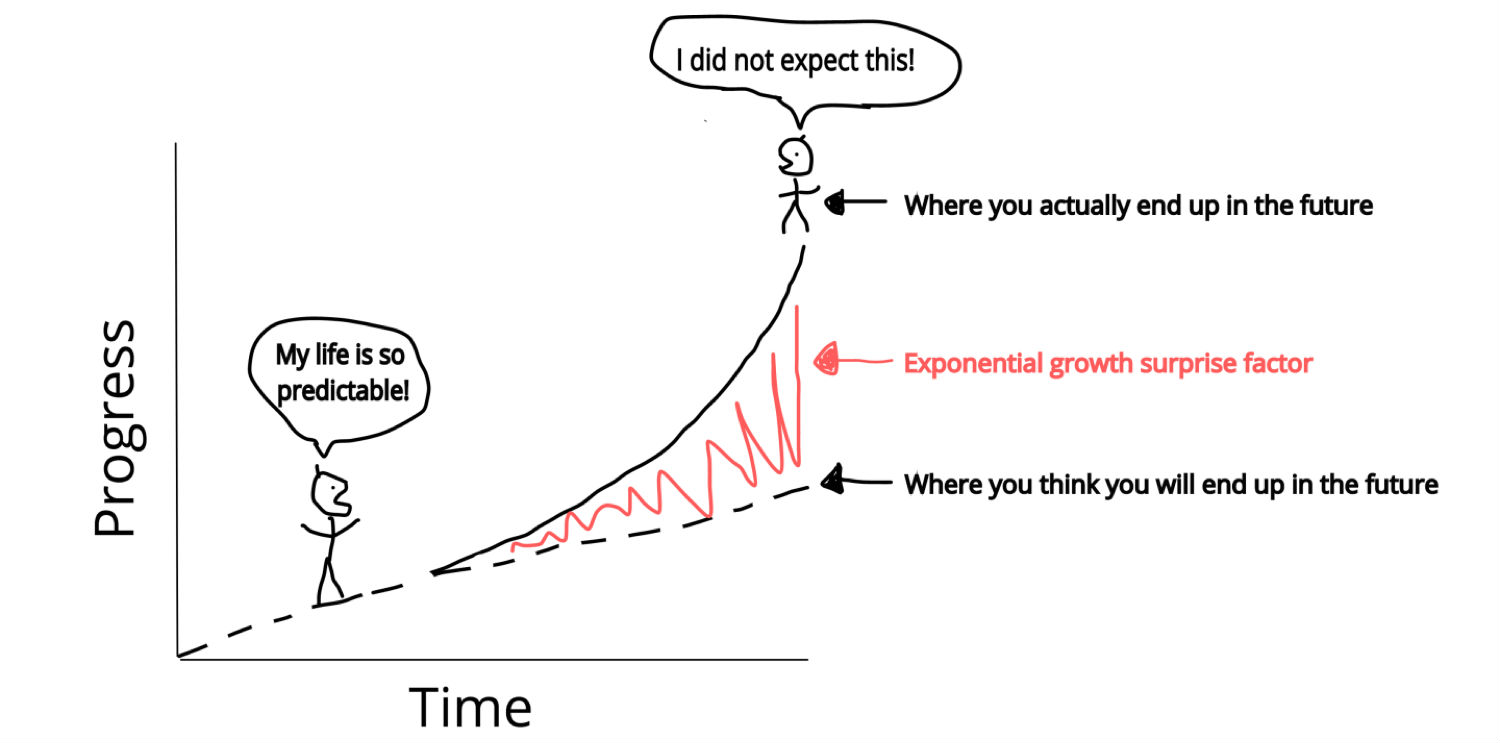

On the other hand, there is a clear underestimation of the force and speed of the changes. There have been numerous charts regarding exponential growth and linear thinking, but here’s a reminder (courtesy of Singularity Hub):

I think most people are betting that the “next ChatGPT” will be only a bit better than the current version, not much better. At this moment I cannot say which way things will go, but my own bet is that it’s going to be much better.

And finally, I will make a somewhat objectionable comparison of this phrase to victim blaming. If you lost your job, it is not because there’s no need for your services anymore, but rather because it’s your fault: you could not keep up. This is a very dangerous mode of thinking that I think does a great disservice to everyone, including the people propagating this nonsense. You should know better!

So how do we put all these things together and what can we say about this line of reasoning? Well, if you’ve been reading up until this point you already know what I am going to say: “No, AI will replace your job. Not people using AI.” For jobs that don’t require a human for liability purposes (the way doctors, lawyers and licensed engineers do), it’s hard to see how a person using AI is better than simply AI.

I must reiterate that I am not interested in the very short-term future (1 to 2 years). It is not relevant. One cannot buy a house, save money or grow a family in 1 to 2 years. Go ahead, use AI to help you stay afloat for a couple of years. It won’t matter after that, when YOU are the slowest component of the chain and replacing YOU will improve the productivity of the entire process.

Here’s an obligatory meme that I find best relays the message:

Prompt engineering is the future

This is the most misguided thing I have seen in a long time and I wish people would be more careful about taking it seriously. I want to explore the absurdity of the following statement (seen on LinkedIn):

Prompt Engineering: one of the hottest🔥 and most rewarding🤑 fields in tech

My response to the original poster was that it is not engineering and it’s not a field in tech. And don’t get me started on the rewarding part.

So why isn’t it engineering? Well, is asking a question “engineering”? Excuse me for being pedantic but from what I’ve learned it’s part of the field of “philosophy”. There is no engineering involved. And even if there is “skill” required to formulate a well-structured question for the LLM, that is not an engineering pursuit.

Now, I don’t want to linger on technicalities over the naming of this process. What interests me instead is the amount of knowledge required to formulate these questions, and I would guess it’s very little. You see, calling it “engineering” creates the wrong expectations. One can argue that the name tries to convince people that it’s a marketable skill - a skill that they can use for employment security and that, in some cases, gives them an advantage.

On a related note, the type of questions one asks is intimately tied to the architecture of the underlying model one uses. The alignment process used by each model provider is different and changes over time to make the model better at understanding even the vaguest of questions. So this purported “skill” is nothing but a temporary adjustment in how one asks questions - a process that is bound to change often.

My advice to people is this: do not take these “skills” too seriously. I know these are uncertain times and things are changing fast. One needs to be very careful not to be left behind. But this time, it’s out of your control. One cannot do much to stay relevant and these “new skills” that are being sold to you are just red herrings.